Serverless is a dramatically better way to develop and deploy, well, anything. But what actually is serverless, and what does it do for developers? Learning the four fundamental principles of serverless lets you cut through the hype and evaluate whether any tech claiming to be “serverless” actually delivers on its promises.

When they were first introduced, public clouds revolutionized how quickly, and how cheaply, we could build and deploy apps. Simply put, serverless is the next logical evolution of the cloud computing paradigm, because it enables even more efficient use of limited resources: time, money, and developer brainpower.

- Serverless equals drastically faster time to value for developers because the platform abstracts and automates many hands-on tasks so devs can simply focus on building features and services.

- Serverless allows consumption-based billing — basically metered usage where you only pay for the compute you actually use.

At the same time, though, it can be hard to pin down a solid understanding of what “serverless” actually signifies. It doesn’t help that the name is an oxymoron — yes, serverless computing does still involve actual servers — and that there is no one “official” definition. This allows vendors to slap the name “serverless” on all kinds of platforms and services these days, hoping to hop on the buzzword bandwagon. But what do these actually do? Seriously, WTF is serverless?

The simplest way to cut through the hype is to understand the defining principles of serverless. Think of them as a cheat sheet for evaluating anything calling itself “serverless” to find what it actually delivers.

The four fundamental principles of serverless

What makes anything (a platform, an app, a database) serverless? There are four things that any entity calling itself “serverless” needs to provide.

Hands-off (server) service

Despite the name, serverless doesn’t refer to literally getting rid of hardware. Instead, the term is meant to signify getting rid of all the worries involved with running the hardware, by letting the serverless platform handle as many of the hands-on particulars as possible.

Serverless saves time and work for both sides of DevOps. For Ops, the obvious win is that the platform takes on the work of building out, configuring, and managing the hardware: tedious manual chores like keeping the operating system patched and updated, configuring the firewall, and fiddling with drivers. For devs, a serverless application means a simple API endpoint in the cloud plus serverless functions (a.k.a. “functions-as-a-service”): small, independent functions that each serve a single purpose, like making an API call. Both let devs get straight to work building actual features and functionality.

In other words: server less, code more.
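To make “single purpose” concrete, here is a minimal sketch of a serverless function in Python. It assumes the `handler(event, context)` shape that AWS Lambda uses for Python functions and returns a response in the format of an API Gateway proxy integration (`statusCode`, `headers`, `body`); the `name` query parameter and the greeting itself are hypothetical. Everything outside this function (servers, OS, scaling) is the platform’s job.

```python
import json


def handler(event, context):
    """Single-purpose function: return a JSON greeting.

    Assumes an API Gateway proxy-style event; the `name` query
    parameter is a hypothetical example input.
    """
    # API Gateway sends null here when there is no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local usage: on a real platform, event and context are supplied for you.
    print(handler({"queryStringParameters": {"name": "Ada"}}, None))
```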

Automated elastic scale

Serverless means never having to worry about whether your app or database can handle a sudden spike in usage. In a serverless application architecture, components scale up and down automatically to meet demand. This includes scaling all the way down to zero when not in use, and spinning back up on demand when called upon.

(Real talk: the scalability of serverless can sometimes result in “cold starts”, the startup time required to “warm up” a new serverless worker to execute your code when it hasn’t been called in a while. When this happens there can be latency, from a few hundred milliseconds up to several seconds, before the function becomes operational. However, this is relatively rare: for example, AWS says fewer than 0.25% of invocations of its Lambda serverless compute service are cold starts.)

So, think of serverless as getting the benefits of distributed application architecture without needing to get hands-on with complex tech like containers and Kubernetes. A serverless platform abstracts away that complexity (and automates as much of it as possible).

Consumption-based billing

Serverless platforms, and other models such as more complex infrastructure-as-a-service offerings like serverless databases, make it possible to provision hardware responsively, only when it’s needed. Serverless automates the elasticity of compute, so you only use compute for what you’re actively running, and only pay for what you use, when you use it. No overprovisioning to handle unpredictable traffic spikes, no fleet of servers to keep running behind load balancers. A serverless system scales up when demand surges and then spins itself back down when demand drops again.

And when demand drops to zero, so does your consumption. Serverless means never paying for compute and storage you’re not using: you are billed only for the resources you actually use, and you can set spending limits so you never overrun your budget. This makes serverless ideal for big, intermittent workloads, since there’s no charge when the platform isn’t in use.
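Consumption-based pricing for serverless functions is commonly metered per request plus per unit of compute time weighted by allocated memory (often quoted in GB-seconds). The sketch below shows that arithmetic along with a simple spending-limit check. The rates are made-up placeholders, not any provider’s actual prices, and real bills can include other line items.

```python
from dataclasses import dataclass

# Hypothetical placeholder rates; real providers publish their own pricing.
PRICE_PER_MILLION_REQUESTS = 0.25
PRICE_PER_GB_SECOND = 0.00002


@dataclass
class Workload:
    invocations: int        # function calls in the billing period
    avg_duration_s: float   # average execution time per call, in seconds
    memory_gb: float        # memory allocated to the function, in GB


def monthly_cost(w: Workload) -> float:
    """Pay-per-use: cost scales with requests and GB-seconds actually consumed."""
    request_cost = w.invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    gb_seconds = w.invocations * w.avg_duration_s * w.memory_gb
    compute_cost = gb_seconds * PRICE_PER_GB_SECOND
    return request_cost + compute_cost


def within_budget(w: Workload, budget: float) -> bool:
    """Simple spending-limit check against a (hypothetical) budget cap."""
    return monthly_cost(w) <= budget


if __name__ == "__main__":
    busy = Workload(invocations=2_000_000, avg_duration_s=0.2, memory_gb=0.5)
    idle = Workload(invocations=0, avg_duration_s=0.2, memory_gb=0.5)
    print(f"busy month: ${monthly_cost(busy):.2f}")
    print(f"idle month: ${monthly_cost(idle):.2f}")
    print("busy month within $10 budget:", within_budget(busy, 10.0))
```

Under this model an idle month costs exactly zero, which is the whole point of consumption-based billing.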

Built-in resilience and fault tolerance

Another power of the intelligent automation inherent in serverless: the potential to create “self-healing” applications. Serverless makes it possible to architect applications for high resiliency and graceful failover, thanks to automated error resolution.

No matter where you sit on the DevOps team, serverless means an application that can handle whatever the world throws your way because it automatically picks itself up, dusts itself off, and just keeps on working.
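How a given platform achieves self-healing varies, so the following is only a generic sketch of one common building block: retrying a failed call with exponential backoff and random jitter instead of failing on the first transient error. The function name, the retryable exception types, and the delay values are assumptions for illustration; real platforms layer more on top, such as automatically replacing failed workers.

```python
import random
import time


def retry_with_backoff(
    operation,
    max_attempts=5,
    base_delay_s=0.01,
    max_delay_s=1.0,
    retry_on=(ConnectionError, TimeoutError),
    sleep=time.sleep,
):
    """Call `operation` until it succeeds, retrying only on transient errors.

    Before retry n (0-based), waits a random time in
    [0, min(max_delay_s, base_delay_s * 2**n)] ("full jitter"), so many
    clients recovering at once don't all retry in lockstep.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return operation()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            delay = min(max_delay_s, base_delay_s * (2 ** attempt))
            sleep(random.uniform(0, delay))


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_call():
        """Hypothetical dependency that fails twice, then recovers."""
        calls["n"] += 1
        if calls["n"] < 3:
            raise ConnectionError("transient failure")
        return "ok"

    print(retry_with_backoff(flaky_call), "after", calls["n"], "attempts")
```

Errors outside `retry_on` (a bug, bad input) propagate immediately, since retrying them would only hide the problem.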

Elite tech for the non-FAANG org

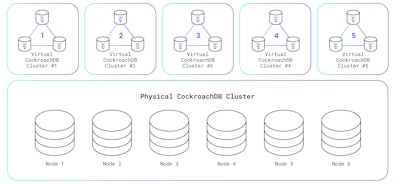

Serverless is the best way to serve any ambitious, fast-growing company of any size. Even the largest companies in the world build many things, internal and external, that don’t need three nodes, or even one node; maybe just 1/10th of one node. Small orgs and startups need the same thing from a different direction: the ability to start small but scale automatically and horizontally as needed, without shocking end-of-the-month invoices from their cloud provider. This is why a multitenant serverless consumption model is a beautiful thing for just about any organization in any sector.

The really cool thing about serverless, actually, is that it democratizes some very high-caliber tech. Complex distributed systems solutions that were once available only to tech giants like Google, Netflix, or Amazon are now accessible to smaller organizations and startups. Not just literally accessible, as in “you too can use the same platform as [insert FAANG here],” but truly accessible, as in you can actually build and deploy distributed applications with a small dev team and limited DevOps/SRE resources.

Serverless example in the real world

Let’s look at a common real-world developer task: connecting two applications together. Say you want to get automatic notifications in Slack whenever a new star gets added to one of your GitHub repositories.

To build this integration the traditional, non-serverless way, a dev first has to provision a server to receive the inbound webhook from GitHub. Already this is a potential speed bump, depending on your org’s permissions and processes, and on whether it means opening a ticket with ops.

Next, this server must be set up to (1) always be available to receive the inbound GitHub webhook, and (2) either be replicated behind a load balancer or be configured with enough headroom to handle potential traffic spikes. The server must also allow public internet access, plus any additional authorization for the inbound GitHub webhook. Finally, the entire application server, operating system, and runtime will require ongoing management and updates/patching.

The same process in serverless: Using a serverless function, you can quickly create endpoints to handle inbound GitHub webhooks, run your logic on the webhook payload, and then hand off the result to the receiving service, Slack, for execution. That’s it. Everything else is handled behind the scenes.
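Here is a minimal sketch of that function’s logic in Python. It assumes GitHub’s `star` webhook event, whose payload includes `action`, `repository.full_name`, `repository.stargazers_count`, and `sender.login`. It verifies the `X-Hub-Signature-256` header (`sha256=` plus an HMAC-SHA256 of the raw body, keyed with the webhook secret) and forwards a message to a Slack incoming webhook, which accepts a JSON body with a `text` field. The `handle` entry point, the secret, and the Slack URL are placeholders, and the HTTP call is injected so the logic can be tested without network access.

```python
import hashlib
import hmac
import json
import urllib.request


def verify_signature(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Check GitHub's X-Hub-Signature-256 header against the raw request body."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signature_header or "")


def build_slack_message(payload: dict):
    """Turn a GitHub 'star' event payload into a Slack message, or None to skip."""
    if payload.get("action") != "created":
        return None  # ignore un-stars ("deleted")
    repo = payload["repository"]
    return {
        "text": (
            f":star: {payload['sender']['login']} starred {repo['full_name']} "
            f"(now {repo['stargazers_count']} stars)"
        )
    }


def post_json(url: str, data: dict) -> None:
    """POST JSON to a Slack incoming-webhook URL using only the standard library."""
    req = urllib.request.Request(
        url,
        data=json.dumps(data).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=5):
        pass


def handle(headers: dict, body: bytes, secret: bytes, slack_url: str, post=post_json) -> int:
    """Hypothetical function entry point; returns an HTTP status code."""
    # Header names are case-insensitive, and some platforms lower-case them.
    h = {k.lower(): v for k, v in headers.items()}
    if not verify_signature(secret, body, h.get("x-hub-signature-256", "")):
        return 401
    if h.get("x-github-event") != "star":
        return 204  # not an event this function cares about
    message = build_slack_message(json.loads(body))
    if message is not None:
        post(slack_url, message)
    return 200
```

On a real platform you would wire `handle` to whatever request object the platform provides and read the secret and Slack URL from its configuration rather than hard-coding them.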

The value of serverless is letting the platform handle and automate all these tedious but necessary tasks while the humans get to concentrate on building the actual features and services that are the whole reason your business exists — and deploying them to your cloud platform with a single command.

Architecting differently

As promising and powerful as the serverless model is, it does require a different approach to application architecture.

On the front end, where developers mainly work with serverless as an API in the cloud and functions-as-a-service, architecture is less of a concern. For full stack and back end software developers, however, serverless is best understood as a core ingredient in a component architecture or microservices approach. You use a small slice of a server to do something specific (run one function, serve relational data, handle API calls, etc.) without having to manage specific servers. You just use resources, and the platform scales them and handles failures seamlessly behind the scenes.

To work within a serverless architecture, then, you can’t simply plan to run an entire app on one server. You have to break the app down into discrete services that can be hosted and served independently of one another. In this full stack context, a serverless approach lets you easily define the relationships between those microservices without having to worry about failover, scaling, and bottlenecks the way you do with traditional servers. But it is inherently more complex and requires a new way of thinking: a distributed mindset.

Basically, software developers can think of serverless as a platform for building tiny back-end *-as-a-service modules (fill in the “*” with all the different Lego pieces you need to build your application). Unfortunately, this model does not fit traditional software architecture patterns.

High-resiliency serverless applications with automated error resolution don’t just happen, alas.

They require either (1) re-architecting existing software or (2) building software as serverless from the start. Building from scratch is usually best and certainly simpler, which is why serverless can be best for startups or greenfield projects. Enterprises with a lot of custom legacy architecture need to evaluate and quantify the benefits they hope to gain from going serverless against the costs of time and resources it could take to re-architect in order to capture those benefits.

One of the superpowers of serverless is that it’s fully customizable to your application’s precise requirements. However, this flexibility can become a drawback if you later need to change your application architecture. Serverless adds complexity here because you have to not only change the code itself but also make sure the change doesn’t screw up the serverless config. Ideally you’ve tailored that config to the app’s needs, so when the needs change, the corresponding services have to change too.

Perhaps, again, it’s helpful to think in terms of Lego blocks. I set my AWS Lambda function to run with X amount of memory and a Y timeout. Then a product feature change requires updating that function, and I need to make sure I’m not over- or underprovisioning it. But all I have to do is swap in the new function, with its new config, for the old one. It’s all abstracted from the data layer, the API layer, and everything else, because all that the connected microservices ever see of each other is the same requests.
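The Lego-block swap can be sketched in a few lines. Below, each published function version carries its own memory and timeout settings, and callers always go through the same alias, so repointing the alias changes the logic and config without changing the request contract other services see. This loosely mirrors the versions-and-aliases idea on platforms such as AWS Lambda, but the `Registry` class, its methods, and the numbers are hypothetical, not any provider’s API.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class FunctionVersion:
    code: Callable[[dict], dict]  # the function's logic
    memory_mb: int                # memory setting ("X"); recorded, not enforced here
    timeout_s: int                # timeout setting ("Y"); recorded, not enforced here


class Registry:
    """Hypothetical registry: an alias points at one immutable, published version."""

    def __init__(self) -> None:
        self._versions: Dict[int, FunctionVersion] = {}
        self._aliases: Dict[str, int] = {}

    def publish(self, version: FunctionVersion) -> int:
        number = len(self._versions) + 1
        self._versions[number] = version
        return number

    def point_alias(self, alias: str, number: int) -> None:
        # The swap: callers keep using the alias and never notice.
        self._aliases[alias] = number

    def config(self, alias: str) -> FunctionVersion:
        return self._versions[self._aliases[alias]]

    def invoke(self, alias: str, request: dict) -> dict:
        # Other services only ever see this request/response contract.
        return self.config(alias).code(request)


if __name__ == "__main__":
    reg = Registry()
    v1 = reg.publish(FunctionVersion(
        lambda r: {"total": sum(r["items"])}, memory_mb=128, timeout_s=3))
    reg.point_alias("live", v1)
    print(reg.invoke("live", {"items": [1, 2, 3]}))

    # Feature change: new logic ships with its own memory/timeout settings.
    v2 = reg.publish(FunctionVersion(
        lambda r: {"total": sum(r["items"]), "count": len(r["items"])},
        memory_mb=256, timeout_s=10))
    reg.point_alias("live", v2)
    print(reg.invoke("live", {"items": [1, 2, 3]}), reg.config("live").memory_mb)
```

Because old versions stay published, rolling back is just pointing the alias at the previous number again.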

Serverless is here. For real.

We’ve been talking about serverless as the next great future thing (“Cloud 2.0”) for long enough that the inevitable backlash around any new tech (the infamous “trough of disillusionment”) has begun: right now, some people say serverless has been overhyped. Typically, though, they are talking about the first generation of serverless, the execution side. Things like AWS Lambda, Google Cloud Run, or AWS Fargate are all products that mean you don’t have to manage VMs; instead, you just put your application logic in the cloud and Google or Amazon runs it and scales it for you. First-gen serverless consisted of imperative tools that let you take your old code and hide it behind a single function that acted as a trigger. But serverless has since expanded, a lot.

In a way it’s a good thing we’ve reached the “yeah, that’s so last year” dismissive stage among early adopters with serverless, because when that happens with technology it’s typically the sign that it’s now crossing into widespread usage. Results from a recent Red Hat + Cockroach Labs survey on serverless, multi-cloud and Kubernetes adoption trends show that 88% of organizations surveyed are either actively pursuing serverless strategy (49%) or already using it (39%).

We have reached the event horizon where serverless is no longer some hypothetical future but is rapidly becoming the right way to do things now. And knowing the four principles of serverless will help you make sure that any “serverless” offering you’re considering will truly let you server less, code more.